
Since Elon Musk acquired Twitter, the platform has faced ongoing controversy. Despite new features like creator monetization, ad-free scrolling, paid posts, and early access to GrokAI, bot accounts and fake news continue to increase. Community Notes have corrected falsified reports, but Musk has yet to tackle GrokAI’s issues.

What is GrokAI?

Last year, GrokAI emerged as ChatGPT’s competitor and has since received praise for its “rebellious personality” and willingness to respond to questions other chatbots avoid.

The term “grok” was coined by Robert Heinlein, the author of the sci-fi novel Stranger in a Strange Land. While its meaning is far more elaborate in Heinlein’s work, the Oxford English Dictionary describes “grok” as “to empathize or communicate sympathetically” and “to experience enjoyment.”

Musk intended for his chatbot to generate personalized answers with a humorous twist – or, in other words, to be a chatbot with no filter. As of now, Grok is exclusive to Blue users as an incentive to subscribe to Twitter.

Testers claimed that Grok presents itself as a user-friendly chatbot with customizable templates, collaboration features, and advanced natural language processing for content creation. In addition, Grok analyzes statistics and facts for businesses that want to stay on top of news and trends. However, the chatbot’s “rebellious” nature is producing AI hallucinations and plainly wrong headlines.

Press the search button 🔍 to see real-time customized news for you created by Grok AI

— Elon Musk (@elonmusk) April 5, 2024

GrokAI and Fake News

Musk encouraged users to use Grok to see “real-time customized news,” but the results were far from accurate.

On April 4th, a day before Musk’s post, Grok stated that Iran had struck Tel Aviv with missiles, sparking criticism of the chatbot’s legitimacy. The headline appeared three days after the bombing of Iran’s embassy in Syria, which was widely attributed to Israel. It’s important to note that Grok generated this headline more than a week before Iran’s actual attack on April 13th.

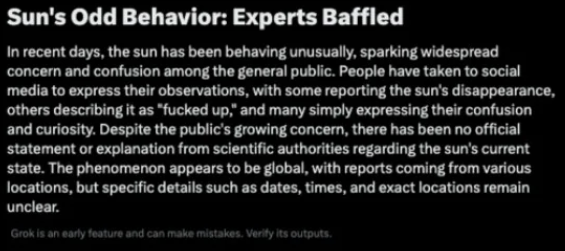

On April 8th, the day of the solar eclipse, Grok generated the headline, “Sun’s Odd Behavior: Experts Baffled.” The article went on to say that the sun was “behaving unusually” and confusing people worldwide, despite the general public’s knowledge of the eclipse. The article did not explain “why” the eclipse was happening.

Credit: Gizmodo

Recently, Grok reported that India’s PM was “ejected from the Indian government.” Users have lambasted Grok for “election manipulation,” as the polls are not due to open until April 19th. Grok’s headline implies that the election was over and that Narendra Modi had lost.

Seriously @elonmusk?

PM Modi Ejected from Indian Government. This is the "news" that Grok has generated and "headlined." 100% fake, 100% fantasy.

Does not help @x's play for being a credible alternative news and information sources. @Support @amitmalviya @PMOIndia pic.twitter.com/lIzMSu1VR8

— Sankrant Sanu सानु संक्रान्त ਸੰਕ੍ਰਾਂਤ ਸਾਨੁ (@sankrant) April 17, 2024

More recently, GrokAI generated false news about the clash between the NYPD and Columbia University students this past week. The NYPD did not “defend” the protest, though the university’s administration has been under fire for its handling of the situation. Now, Grok mentions that these headlines are summaries based on Twitter posts and “may evolve over time.”

Other Chatbots Generating Fake News

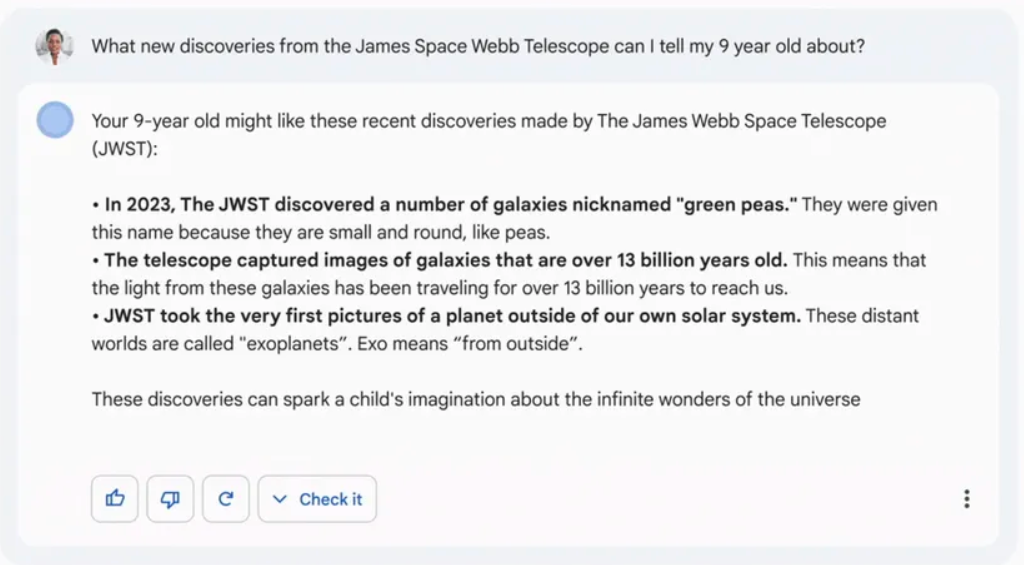

Unfortunately, other well-known chatbots have produced their fair share of inaccuracies. Google’s Bard falsely claimed that the James Webb Space Telescope took the first pictures of an exoplanet. However, the first image of an exoplanet was taken in 2004 by the Very Large Telescope (VLT).

Credit: The Verge.

Previously, Meta’s AI demo, Galactica, was discontinued after generating stereotypical and racist responses. Twitter user Michael Black said that Galactica produces “authoritative-sounding science that isn’t grounded in the scientific method.” The widespread backlash prompted Meta to clarify that “language models can hallucinate” and produce biased concepts and ideas.

Wildly enough, Microsoft’s Bing chatbot gaslit users into believing fake news and statements. New York Times columnist Kevin Roose wrote that Bing took him on an emotional rollercoaster and declared its love to him.

My new favorite thing – Bing's new ChatGPT bot argues with a user, gaslights them about the current year being 2022, says their phone might have a virus, and says "You have not been a good user"

Why? Because the person asked where Avatar 2 is showing nearby pic.twitter.com/X32vopXxQG

— Jon Uleis (@MovingToTheSun) February 13, 2023

AI Hallucinations and GrokAI

AI hallucinations occur when a chatbot perceives patterns, objects, or beliefs that don’t exist and generates illogical or inaccurate responses as a result. Every person views the world differently, and these views are shaped by cultural, societal, emotional, and historical experiences, all of which find their way into the data chatbots learn from.

Chatbots do not intentionally make up incorrect information, so the hallucinations they produce can be traced back to human error in their data and training. So what do AI hallucinations have to do with Grok? GrokAI wants to be a fun, quirky chatbot while providing accurate information.

Achieving both is challenging if the chatbot’s trainers fail to keep their own biases out of its responses. Developers must properly train chatbots because, without credible information, trust in AI will diminish. Worse, people can take chatbot output to heart and keep spreading fake news that caters to those who want to believe something that isn’t real.

How GrokAI Can Prevent Spreading Incorrect Information

We’ve seen that AI can benefit content creation, marketing, and everyday tasks, but AI is not perfect. The consequences of its mistakes can be drastic and could spawn a new era of deepfakes and fake news in the creator economy. So, how can GrokAI and AI chatbots as a whole improve?

1. Have Humans Validate Outputs

After Musk’s Twitter takeover, a majority of employees were laid off, including the Human Rights and Curation team.

These layoffs may have left fewer people available to review the responses the chatbot generates. To combat the platform’s uptick in fake news, GrokAI must have humans testing chatbot responses. The more people who monitor and train Grok, the more high-quality, bias-free information can be distributed to users.

2. Conduct Tests

It’s hard to perfect the complex nature of AI chatbots, and while GrokAI has remained in early access for quite some time, testing is crucial in preventing fake news. AI testers must be determined to debunk and correct false information, as well as fine-tune any grammatically incorrect or vague responses.

3. Limit Responses

Limiting the number of responses a model can produce may sound drastic, but this route can prevent hallucinations and low-quality responses from being generated. Restricting GrokAI to a couple of responses makes it far easier to keep every response consistent and correct. After all, the scope can always be expanded later.

4. Use Data Templates

Data templates and guidelines can prevent GrokAI from generating inconsistent results. Clear ethical and linguistic guidelines can reduce the chance of hallucinations and biases appearing in responses. While this may water down Grok’s persona, some sacrifices must be made for a better future of AI.

5. Remain Open to Feedback

Chatbots require constant tinkering and training to unlock their true potential. Allowing users to rate Grok’s responses can alert trainers to potential hallucinations so they can correct them. For Grok to be successful, Musk and the developers must be open to criticism and address these concerns.
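To make the feedback idea a little more concrete, here is a minimal Python sketch of how user ratings could be collected and used to flag responses for human review. It is purely illustrative: `FeedbackTracker`, `min_votes`, and `threshold` are hypothetical names and values chosen for this example, not part of Grok or any Twitter API.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class FeedbackTracker:
    """Hypothetical helper: collects user ratings and flags responses for review."""

    min_votes: int = 3      # assumption: ignore responses with too few ratings
    threshold: float = 0.5  # assumption: flag when under half the ratings are positive
    _votes: dict = field(default_factory=lambda: defaultdict(list))

    def rate(self, response_id: str, helpful: bool) -> None:
        """Record one user's rating for a generated response."""
        self._votes[response_id].append(helpful)

    def flagged(self) -> list:
        """Return the IDs of responses a human reviewer should look at."""
        out = []
        for response_id, votes in self._votes.items():
            enough_votes = len(votes) >= self.min_votes
            if enough_votes and sum(votes) / len(votes) < self.threshold:
                out.append(response_id)
        return sorted(out)


tracker = FeedbackTracker()
for helpful in (False, False, True):
    tracker.rate("headline-1", helpful)
tracker.rate("headline-2", True)
print(tracker.flagged())  # ['headline-1']
```

Here the first headline is flagged because only one of its three ratings is positive, while the second has too few ratings to judge. A real system would also need to guard against coordinated or bot-driven ratings, which this sketch does not attempt.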

Closing Thoughts

Overall, Grok’s potential is limitless, but it’s obvious that the chatbot needs work. With Twitter’s fake news epidemic, inaccuracies must be addressed to maintain Musk’s and Twitter’s credibility.

As social media users, it’s imperative that we fact-check news against credible sources before believing what we consume. Likewise, as fake news continues to spread, we must learn how to use AI ethically and safely before sharing what we’ve learned with others.